Edge Locations, CloudFront, and the AWS Global Network

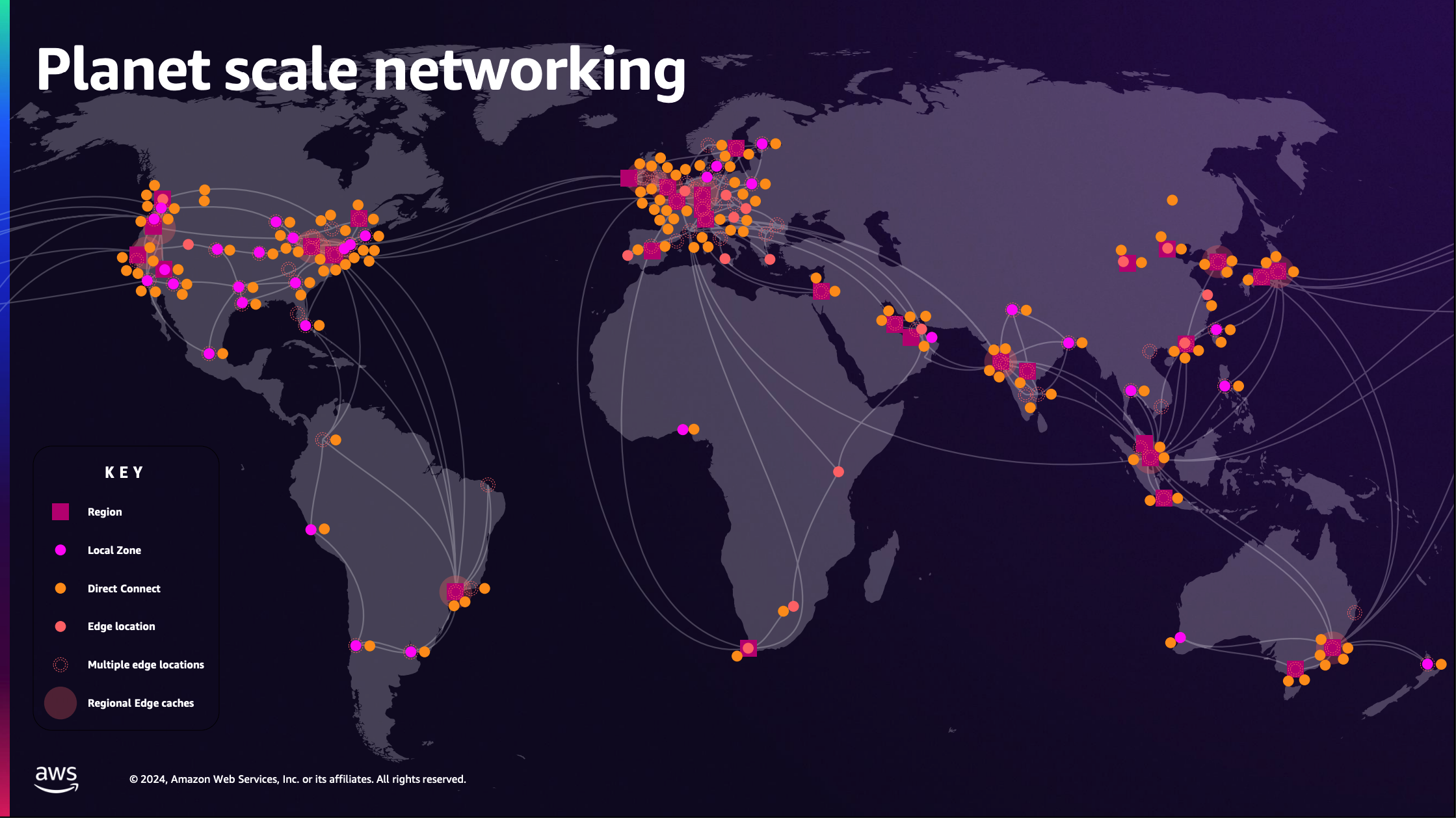

We've talked about AWS regions, clusters of data centers where our main infrastructure lives. But there's another critical part of AWS's global infrastructure we need to understand: edge locations and the global network.

What Are Edge Locations?

Edge locations are smaller than full AWS regions, there are a lot more of them, and they're spread out all over the world.

These locations sit at the "edge" of AWS's network, as close as possible to end users.

Figure: Regions vs. Global Edge Network (legend: Region, Edge Location, Embedded POP, Regional Edge Cache)

Edge locations come in a few different flavors: Edge Locations/Points of Presence (POPs), Regional Edge Caches, and Embedded POPs.

We really don't need to worry about the differences right now, and I'm just going to call them all "edge locations" from here on out.

The important takeaway here is that there are a lot of these edge locations, and they are spread out all over the world.

Why do Edge Locations Matter?

So what can we do with all these edge locations? Can we deploy an EC2 instance in an edge location?

No, we can't.

Can we deploy an S3 bucket in an edge location?

Nope!

Databases? VPCs? Lambda functions?

No, no, and no (well, kind of, but we'll get to that later).

We don't really deploy anything in an edge location. Instead, the edge locations form a network that makes it faster and more reliable to deliver content to users all over the world: a content delivery network.

The AWS Global Backbone Network

AWS has built its own global network of fibre-optic cables that spans the globe. It has literally wired up all its regions and edge locations with its own private network. Oh, and AWS is working on tech like hollow-core fibre to make it even faster.

This AWS backbone network gives us low latency, low packet loss, and high overall network quality and speed.

There are a whole bunch of amazing things AWS has done here, and we get a lot of benefits, like enhanced security and high availability. But one of the easiest things to notice is the speed.

Using the backbone network is usually much faster than using the public internet.

Let's say you have a web server hosted in Mumbai, India and I try to make an HTTP request to that web server from Vancouver, Canada.

When I tested this, it took 1.52 seconds to receive a basic 100-byte response. That's the time it takes for a single HTTP request to complete over the public internet at this distance.
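If you'd like to run a similar test yourself, here is a minimal Python sketch using only the standard library. It times a few complete HTTP GET requests and reports the median, since individual requests over long distances can vary quite a bit. The helper names (`median_latency`, `http_get`) and the URL in the usage note are hypothetical; point it at your own server.

```python
import statistics
import time
import urllib.request
from typing import Callable


def median_latency(request: Callable[[], None], runs: int = 5) -> float:
    """Run `request` `runs` times and return the median wall-clock duration in seconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        request()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def http_get(url: str, timeout: float = 10.0) -> Callable[[], None]:
    """Return a callable that performs one HTTP GET and reads the whole response body."""
    def do_request() -> None:
        # urllib opens a new connection on every urlopen call, so connection
        # setup time is included in each sample.
        with urllib.request.urlopen(url, timeout=timeout) as response:
            response.read()
    return do_request
```

For example, `median_latency(http_get("https://mumbai.example.com/"))` would time five requests to a (hypothetical) server and return the median in seconds.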

But what happens if we use the backbone network instead?

Now when I try to access your web server in Mumbai, AWS can connect me to my closest edge location, which is right here in Vancouver. Then AWS routes my request to your web server in Mumbai over the AWS backbone network.

It takes only a third of the time for a round trip to the other side of the world and back. We've reduced the latency from ~1.5 seconds to ~0.5 seconds.
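Part of why a nearby edge location helps can be seen with a simplified back-of-the-envelope model. Opening a connection takes a few round trips before the request itself is sent (for example, one for the TCP handshake plus one or two for TLS). Going direct, every one of those round trips crosses the full distance. With an edge location in the middle, the setup round trips only travel the short hop to the edge, and the edge forwards the request over a connection to the origin that CloudFront typically keeps open. The function names and round-trip counts below are assumptions for illustration, not AWS's own model, and the sketch ignores server processing and transfer time.

```python
def direct_time(rtt_client_origin: float, setup_round_trips: int) -> float:
    """Estimated seconds for one request sent straight to a distant origin."""
    # Every setup round trip, plus the request/response itself, crosses the full distance.
    return (setup_round_trips + 1) * rtt_client_origin


def via_edge_time(rtt_client_edge: float, rtt_edge_origin: float, setup_round_trips: int) -> float:
    """Estimated seconds for one request routed through a nearby edge location."""
    # Connection setup only covers the short client-to-edge hop.
    client_side = (setup_round_trips + 1) * rtt_client_edge
    # Assumption: the edge already holds an open connection to the origin,
    # so only the request/response crosses the long edge-to-origin path, once.
    return client_side + rtt_edge_origin
```

With made-up round-trip times (not measurements) of 300 ms direct, 10 ms to the edge, and 250 ms from edge to origin, plus 3 setup round trips, `direct_time(0.3, 3)` gives 1.2 s while `via_edge_time(0.01, 0.25, 3)` gives 0.29 s.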

So how do we use this backbone network and all the edge locations?

CloudFront

We don't directly provision the edge locations or the backbone network. Instead, we use services that automatically run on the edge infrastructure, the main one being CloudFront.

CloudFront is AWS's content delivery network (CDN) service. It uses all these edge locations to securely deliver static and dynamic content globally with low latency and high transfer speeds.

For static content, CloudFront caches the files at the edge. For dynamic content, it can route traffic efficiently over the backbone network. You can even run small amounts of code directly in the edge locations.

And you don't pick or manage individual edge locations. By default, a CloudFront distribution is served from all of them.

In the previous example, connecting to a server in Mumbai, we would simply add a CloudFront distribution with the EC2 instance as its origin. Within a few minutes, the latency would drop to about a third.
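As a rough sketch of what adding that distribution involves, the Python function below builds the `DistributionConfig` dictionary that boto3's CloudFront `create_distribution` call accepts, with an EC2 instance's public DNS name as a custom origin. It uses the AWS managed `CachingDisabled` cache policy, since responses from a dynamic server generally shouldn't be cached; the ID is the one AWS documents for that managed policy, but verify it in your account. The function name, origin ID, and domain name are hypothetical, and a real setup would likely also want an origin request policy (to forward query strings, cookies, or headers) and HTTPS to the origin.

```python
import uuid
from typing import Any, Dict

# AWS managed cache policy "CachingDisabled" (verify the ID in the CloudFront console).
CACHING_DISABLED_POLICY_ID = "4135ea2d-6df8-44a3-9df3-4b5a84be39ad"


def ec2_distribution_config(origin_domain: str, origin_id: str = "mumbai-web-server") -> Dict[str, Any]:
    """Build a minimal DistributionConfig for boto3 with an EC2 instance as a custom origin."""
    return {
        # CallerReference must be unique per create request; a UUID is an easy choice.
        "CallerReference": str(uuid.uuid4()),
        "Comment": "Web server in Mumbai behind CloudFront",
        "Enabled": True,
        "Origins": {
            "Quantity": 1,
            "Items": [
                {
                    "Id": origin_id,
                    # CloudFront needs a DNS name here (e.g. the instance's public DNS), not an IP.
                    "DomainName": origin_domain,
                    "CustomOriginConfig": {
                        "HTTPPort": 80,
                        "HTTPSPort": 443,
                        # Assumption: the server only listens on plain HTTP.
                        "OriginProtocolPolicy": "http-only",
                    },
                }
            ],
        },
        "DefaultCacheBehavior": {
            "TargetOriginId": origin_id,
            "ViewerProtocolPolicy": "redirect-to-https",
            # Don't cache dynamic responses; each request is forwarded to the origin.
            "CachePolicyId": CACHING_DISABLED_POLICY_ID,
        },
    }
```

With boto3 installed and credentials configured, you would pass the result along as `boto3.client("cloudfront").create_distribution(DistributionConfig=ec2_distribution_config("ec2-203-0-113-10.ap-south-1.compute.amazonaws.com"))`, where the domain is a placeholder.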

Static Content

Let's say you're serving static content, like a static website, from a bucket in Mumbai. No servers or dynamic content in this example, just static content that doesn't change.

Because it's static, it can easily be cached to improve speed even more. So if we put CloudFront in front of the S3 bucket, we can actually get something more like this:

CloudFront will cache the static content inside the edge locations so there's no need to go all the way to the origin in Mumbai every time. Now my request takes under 100ms to get a response.
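To make the caching idea concrete, here's a toy Python model of a single edge location's cache. The first request for a path is a miss and goes to the origin; later requests within the time-to-live (TTL) are answered from the edge. The `EdgeCache` class is hypothetical and greatly simplified, not how CloudFront is implemented; real TTLs come from cache policies and origin headers such as `Cache-Control`.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class EdgeCache:
    """Toy model of one edge location's cache (illustration only)."""
    fetch_from_origin: Callable[[str], bytes]   # slow trip to the origin, e.g. a bucket in Mumbai
    ttl_seconds: float                          # how long a cached copy stays fresh
    clock: Callable[[], float] = time.monotonic
    _store: Dict[str, Tuple[bytes, float]] = field(default_factory=dict)
    hits: int = 0
    misses: int = 0

    def get(self, path: str) -> bytes:
        now = self.clock()
        cached = self._store.get(path)
        if cached is not None and cached[1] > now:
            # Fresh copy at the edge: no need to go back to the origin.
            self.hits += 1
            return cached[0]
        # Miss or expired: fetch from the origin and remember it until now + TTL.
        self.misses += 1
        body = self.fetch_from_origin(path)
        self._store[path] = (body, now + self.ttl_seconds)
        return body
```

Only the first visitor to a given edge location (or the first one after the TTL expires) pays for the long round trip to the origin; everyone after that is served locally.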

Anything static, like images, videos, web pages, or other assets, can be cached at the edge locations.
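Putting CloudFront in front of a bucket can be sketched as a `DistributionConfig` dictionary for boto3's CloudFront `create_distribution` call, this time with an S3 origin and the AWS managed `CachingOptimized` cache policy, which suits static files. The function name, origin ID, and the choice of `index.html` as the default root object are assumptions, and the policy ID should be verified in the console. The sketch leaves out Origin Access Control, which you would normally add so the bucket itself can stay private.

```python
import uuid
from typing import Any, Dict

# AWS managed cache policy "CachingOptimized" (verify the ID in the CloudFront console).
CACHING_OPTIMIZED_POLICY_ID = "658327ea-f89d-4fab-a63d-7e88639e58f6"


def s3_distribution_config(bucket: str, region: str, origin_id: str = "static-site-bucket") -> Dict[str, Any]:
    """Build a minimal DistributionConfig for boto3 with an S3 bucket as the origin."""
    return {
        "CallerReference": str(uuid.uuid4()),  # must be unique per create request
        "Comment": f"Static site from s3://{bucket}",
        "Enabled": True,
        # Serve index.html when someone requests the bare distribution domain.
        "DefaultRootObject": "index.html",
        "Origins": {
            "Quantity": 1,
            "Items": [
                {
                    "Id": origin_id,
                    # Regional S3 REST endpoint, e.g. region "ap-south-1" for Mumbai.
                    "DomainName": f"{bucket}.s3.{region}.amazonaws.com",
                    # Empty string means no legacy origin access identity. To keep the bucket
                    # private you would also set "OriginAccessControlId" here (not shown).
                    "S3OriginConfig": {"OriginAccessIdentity": ""},
                }
            ],
        },
        "DefaultCacheBehavior": {
            "TargetOriginId": origin_id,
            "ViewerProtocolPolicy": "redirect-to-https",
            # Cache static files at the edge so most requests never reach the origin region.
            "CachePolicyId": CACHING_OPTIMIZED_POLICY_ID,
        },
    }
```

For a hypothetical bucket named `my-site-bucket` in Mumbai, you would call `s3_distribution_config("my-site-bucket", "ap-south-1")` and pass the result to `create_distribution(DistributionConfig=...)`.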

Edge computing

It's not all about static content, though. CloudFront also allows you to run code at the edge locations, through CloudFront Functions and Lambda@Edge.

These are smaller and more constrained environments than full servers or even regular AWS Lambda functions. However, they let us run some logic at the edge while keeping the main infrastructure, like S3 storage, EC2 instances, and databases, in a single region.
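For a taste of what edge code looks like, here's a minimal sketch of a Lambda@Edge function in Python (CloudFront Functions only support JavaScript, while Lambda@Edge also offers Python runtimes). Attached as an origin request trigger, it rewrites paths like `/docs/` or `/about` to `/docs/index.html` and `/about/index.html`, a common fix when serving a static site from an S3 bucket. The handler reads the request from `event["Records"][0]["cf"]["request"]`, which is the event shape CloudFront passes to Lambda@Edge; the specific rewrite rules are just an example.

```python
from typing import Any, Dict


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Lambda@Edge origin-request handler: map directory-style paths to index.html."""
    request = event["Records"][0]["cf"]["request"]
    uri = request["uri"]
    last_segment = uri.rsplit("/", 1)[-1]
    if uri.endswith("/"):
        # '/docs/' -> '/docs/index.html'
        request["uri"] = uri + "index.html"
    elif "." not in last_segment:
        # No file extension, e.g. '/about' -> '/about/index.html'
        request["uri"] = uri + "/index.html"
    # Returning the (possibly modified) request tells CloudFront to continue to the origin.
    return request
```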

We'll touch on these a little bit more in the Serverless section.

Summary

In the next part, we will set up CloudFront to serve a static website from an S3 bucket. This is a simple use case, and as we've seen, CloudFront is capable of much more. However, it will give us a chance to see how CloudFront works in practice.

We won't be using CloudFront in many of the other parts of this course because I want us to focus on other services directly.

But keep in mind that we can always integrate CloudFront into our AWS infrastructure if we want the edge benefits. Even a basic server running on an EC2 instance can use CloudFront with a VPC origin to take advantage of the backbone network.

Remember that CloudFront and the global edge network exist, even when we're not using them.